Threat hunting is most effective when teams pivot from chasing short-lived indicators of compromise to understanding attacker behavior. By centering on tactics, techniques, and procedures mapped to MITRE ATT&CK, you can generate fresher, higher-confidence IOCs as by-products of real activity, reduce noise, and speed investigations.

Every security team knows the frustration: you get a fresh batch of indicators of compromise from your threat intelligence feed, load them into your SIEM, and wait for alerts. When they come, half are false positives from legitimate business traffic, and the other half point to attacks that happened weeks ago, long after any damage was done. Meanwhile, the real threats slip through using techniques you’ve never seen before, with infrastructure that won’t appear on any IOC list until it’s too late.

This is the fundamental problem with IOC-centric threat hunting. While indicators like IP addresses, file hashes, and domain names serve an important role in cybersecurity, building your entire hunting program around them is like reading yesterday’s newspaper. Attackers rotate infrastructure faster than you can block it, and by the time an IOC makes it to your feed, the campaign has likely moved on. This post explores a better approach: behavior-first threat hunting that focuses on tactics, techniques, and procedures (TTPs) mapped to the MITRE ATT&CK framework. We’ll show you how this shift not only generates fresher, higher-confidence indicators as natural by-products but also reduces false positives, speeds investigations, and creates detections that actually last.

IOCs vs. TTPs

According to NIST, indicators of compromise are technical artifacts or observables that suggest an attack is underway or has occurred. Typical examples include IP addresses, domains, URLs, file hashes, and registry keys. These indicators make effective evidence and search pivots for retro hunts and block lists, but they are transient and often noisy when sourced from generic feeds without strong context, confidence, or time-to-live metadata.

Tactics, techniques, and procedures (TTPs) describe adversary behavior in varying levels of detail. The MITRE ATT&CK knowledge base organizes these behaviors from real-world observations, giving threat hunting teams a common taxonomy for detection engineering and investigation. Examples include OS credential dumping, remote-service lateral movement, and application-layer command and control. Because behaviors reflect how compromises actually unfold, they persist across infrastructure changes and support longer-lived, higher-confidence detections.

Instead of starting with generic threat indicators, effective threat hunting teams hunt for known attack patterns first, then create their own custom indicators based on what they actually find in their network. When they find evidence of these behaviors during their investigation, they extract relevant indicators (like file hashes, IP addresses, or command signatures) that are specific to their own environment. These custom indicators are then used to improve future alerts and strengthen security controls.

Why Do IOCs Go Stale?

In theory, IoCs should tell you everything you need to know to easily block attackers. The problem? Attackers constantly switch their infrastructure (new cloud servers, new domains, new proxies) while malware creators pump out variations of the same tools with different signatures. Even well-curated lists can drown teams in weak matches that burn time without improving outcomes. The problem is not that IOCs are completely useless; it is that most IOCs are isolated and divorced from your environment and your active threats. The solution is to generate IOCs from live adversary activity that is already tied to the behaviors you care about and the assets you must protect.

To keep your IOCs useful, manage them properly. Track when each indicator was first and last seen, how reliable the source is, and when it should expire. Give more weight to indicators you’ve seen in your own network than to generic threat intelligence feeds. Before promoting a candidate indicator, enrich it with context such as passive DNS, TLS certificate details, JA3 or JA4 fingerprints, process lineage, and the observed ATT&CK technique.
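
As a rough illustration of this kind of lifecycle tracking, here is a minimal Python sketch. The `Indicator` class, the `SOURCE_WEIGHT` values, the linear decay, and the small bonus for ATT&CK context are all assumptions made for the example, not a prescribed scoring model; tune them to your own data.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical weights: indicators seen in your own network outrank generic feeds.
SOURCE_WEIGHT = {"internal": 1.0, "partner": 0.7, "generic_feed": 0.4}


@dataclass
class Indicator:
    value: str
    kind: str             # e.g. "ip", "domain", "sha256"
    source: str           # key into SOURCE_WEIGHT
    first_seen: datetime
    last_seen: datetime
    ttl: timedelta        # how long after last_seen the indicator stays active
    technique: str = ""   # observed ATT&CK technique ID, if known

    def expires_at(self) -> datetime:
        return self.last_seen + self.ttl

    def is_active(self, now: datetime) -> bool:
        return now < self.expires_at()

    def score(self, now: datetime) -> float:
        """Source weight, decayed linearly over the TTL, plus a small context bonus."""
        if not self.is_active(now):
            return 0.0
        remaining = (self.expires_at() - now) / self.ttl  # fraction of TTL left
        base = SOURCE_WEIGHT.get(self.source, 0.2) * remaining
        bonus = 0.1 if self.technique else 0.0
        return round(min(1.0, base + bonus), 3)


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
internal = Indicator(
    value="203.0.113.10", kind="ip", source="internal",
    first_seen=datetime(2024, 1, 5, tzinfo=timezone.utc),
    last_seen=datetime(2024, 1, 8, tzinfo=timezone.utc),
    ttl=timedelta(days=10), technique="T1003",
)
stale = Indicator(
    value="198.51.100.7", kind="ip", source="generic_feed",
    first_seen=datetime(2023, 11, 1, tzinfo=timezone.utc),
    last_seen=datetime(2023, 12, 1, tzinfo=timezone.utc),
    ttl=timedelta(days=30),
)
```

With these sample values, the internally observed indicator still scores high, while the feed entry has passed its expiry and scores zero, so it would drop out of active matching.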

Test indicators against your own data first. If they’re relevant, automatically push them to your security tools (SIEM, XDR, and SOAR) with clear response playbooks. Measure how well they work according to their accuracy, investigation time, and number of false positives. Drop the feeds that aren’t helping. For a deeper dive into keeping your threat intelligence fresh, check out this blog.
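
One way to put numbers behind "drop the feeds that aren’t helping" is sketched below in Python. The alert record shape (`feed`, `true_positive`, `minutes`) and the thresholds in `feeds_to_drop` are hypothetical; in practice the inputs would come from your case management or SIEM data.

```python
from collections import defaultdict
from statistics import mean


def feed_metrics(alerts):
    """Summarize alert outcomes per feed.

    Each alert is a dict with hypothetical keys:
    'feed' (str), 'true_positive' (bool), 'minutes' (investigation time).
    """
    grouped = defaultdict(list)
    for alert in alerts:
        grouped[alert["feed"]].append(alert)

    report = {}
    for feed, items in grouped.items():
        true_positives = sum(1 for a in items if a["true_positive"])
        report[feed] = {
            "alerts": len(items),
            "false_positives": len(items) - true_positives,
            "precision": true_positives / len(items),
            "mean_minutes": mean(a["minutes"] for a in items),
        }
    return report


def feeds_to_drop(report, min_precision=0.5, min_alerts=3):
    """Feeds with enough alerts to judge and precision below the threshold."""
    return sorted(
        feed for feed, m in report.items()
        if m["alerts"] >= min_alerts and m["precision"] < min_precision
    )


alerts = [
    {"feed": "internal_deception", "true_positive": True, "minutes": 10},
    {"feed": "internal_deception", "true_positive": True, "minutes": 20},
    {"feed": "internal_deception", "true_positive": True, "minutes": 30},
    {"feed": "generic_feed", "true_positive": False, "minutes": 40},
    {"feed": "generic_feed", "true_positive": False, "minutes": 50},
    {"feed": "generic_feed", "true_positive": True, "minutes": 30},
    {"feed": "generic_feed", "true_positive": False, "minutes": 60},
]
report = feed_metrics(alerts)
candidates = feeds_to_drop(report)
```

The `min_alerts` guard is a deliberate choice: a feed with only one or two alerts has not produced enough evidence to be judged either way.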

What is Behavior-Based Threat Hunting?

When threat hunting is behavior-based, analysts start from hypotheses like “adversary will harvest credentials from these endpoints” or “adversary will move laterally using remote services” rather than starting from their most recent IP list. These behavior-led hypotheses help you get ahead of likely attacker moves even before indicators appear. The approach improves hypothesis quality, focuses logging on the data that matters, and raises detection fidelity.

It also makes investigations faster because behaviors carry built-in context. For example, if you confirm credential dumping on a host with recent abnormal authentication activity, you can immediately scope impact, pivot to adjacent assets, and generate specific, real-time IOCs from what you observed. In parallel, teams can validate coverage against expected techniques, place controls at likely choke points, and tune analytics ahead of incidents.

Behavior-based threat hunting also improves automation. When you tag events with ATT&CK techniques, your security tools can correlate data better and respond automatically. Since you’re working with higher-quality, environment-specific indicators, you get fewer false positives and faster response times. Schedule regular hunts for your top threat techniques, test your defenses, and fix gaps proactively. This creates a cycle: observed behaviors improve your indicators, better indicators strengthen your defenses, and stronger defenses give you cleaner data for the next hunt.

How To Generate IOCs That Actually Help with Threat Hunting

Start by defining scope and threat priorities. Identify crown jewels, high-value identities, and the ATT&CK techniques you most expect to see. Build isolated, high-fidelity deception environments that mirror those assets. Set success metrics: fewer false positives, faster threat detection, and more detections from your own observations than from generic feeds.

It is essential to make these deception environments credible. Seed them with synthetic credentials, realistic file shares, business-looking documents, and SaaS breadcrumbs that reflect real workflows. CounterCraft The Platform makes it simple to rotate lures and environment elements on a schedule, vary names and locations, and script normal user activity so nothing feels static.

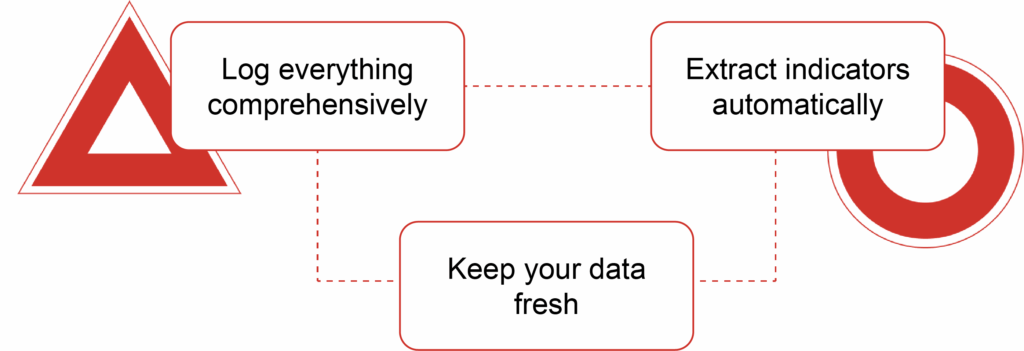

Log everything comprehensively. Record all system activity, from processes to network connections, login attempts, and file access. Tag each event with the relevant ATT&CK technique and affected system. Keep this separate from your production environment and store it so analysts can easily trace from suspicious behavior to the technical details.
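
To make the tagging step concrete, here is a deliberately simple Python sketch that labels process events with ATT&CK technique IDs based on command-line patterns. The `RULES` list, the event fields, and the `attack_techniques` key are assumptions for illustration; the two patterns are toy examples, and real detections need far more robust logic than a regex on a command line.

```python
import re

# Illustrative rules only: (ATT&CK technique ID, pattern on the command line).
RULES = [
    # T1003.001, OS Credential Dumping: LSASS Memory
    ("T1003.001", re.compile(r"sekurlsa::logonpasswords|comsvcs\.dll.*minidump", re.I)),
    # T1021.002, Remote Services: SMB/Windows Admin Shares
    ("T1021.002", re.compile(r"\\\\[\w.-]+\\(?:admin|c)\$", re.I)),
]


def tag_event(event):
    """Return a copy of the event with matching technique IDs attached."""
    command_line = event.get("command_line", "")
    techniques = [tid for tid, pattern in RULES if pattern.search(command_line)]
    return {**event, "attack_techniques": techniques}


dump = tag_event({
    "host": "decoy-fin-01",
    "command_line": r"rundll32.exe C:\Windows\System32\comsvcs.dll, MiniDump 624 C:\temp\out.dmp full",
})
lateral = tag_event({
    "host": "decoy-fin-01",
    "command_line": r"net use \\fin-fs01\ADMIN$ /user:billing_svc",
})
benign = tag_event({"host": "decoy-fin-02", "command_line": "notepad.exe report.txt"})
```

Keeping the host on the same record as the technique ID is what lets an analyst move from "credential dumping was seen" to "on which system, with which command" without a second lookup.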

Extract indicators automatically. When you confirm an attack technique, generate IOCs from what you observed: file hashes, IP addresses, registry changes, command lines, and process relationships. Add reliability scores and timestamps, then push these to your security tools with clear response instructions.
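
The extraction step might look something like the following Python sketch. It assumes events already carry a list of ATT&CK technique IDs in a hypothetical `attack_techniques` field, and the other field names (`sha256`, `remote_ip`, `registry_key`, `command_line`, `parent_image`, `image`) and the default confidence value are likewise placeholders rather than any particular product’s schema.

```python
from datetime import datetime, timezone

# Hypothetical mapping from event fields to indicator kinds.
FIELD_KINDS = {
    "sha256": "file_hash",
    "remote_ip": "ip",
    "registry_key": "registry",
    "command_line": "command_line",
}


def extract_iocs(events, confidence=0.9):
    """Build deduplicated IOC records from technique-tagged events."""
    iocs = {}
    for ev in events:
        techniques = ev.get("attack_techniques") or []
        if not techniques:
            continue  # only confirmed, technique-tagged activity yields IOCs
        candidates = [(kind, ev.get(field)) for field, kind in FIELD_KINDS.items()]
        if ev.get("parent_image") and ev.get("image"):
            lineage = f'{ev["parent_image"]} > {ev["image"]}'
            candidates.append(("process_lineage", lineage))
        for kind, value in candidates:
            if not value:
                continue
            ioc = iocs.setdefault((kind, value), {
                "kind": kind, "value": value, "confidence": confidence,
                "first_seen": ev["timestamp"], "last_seen": ev["timestamp"],
                "techniques": set(), "hosts": set(),
            })
            ioc["first_seen"] = min(ioc["first_seen"], ev["timestamp"])
            ioc["last_seen"] = max(ioc["last_seen"], ev["timestamp"])
            ioc["techniques"].update(techniques)
            ioc["hosts"].add(ev["host"])
    return list(iocs.values())


t0 = datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc)
t1 = datetime(2024, 5, 1, 9, 5, tzinfo=timezone.utc)
events = [
    {"host": "decoy-fin-01", "timestamp": t0, "remote_ip": "203.0.113.10",
     "parent_image": "winword.exe", "image": "powershell.exe",
     "attack_techniques": ["T1059.001"]},
    {"host": "decoy-fin-02", "timestamp": t1, "remote_ip": "203.0.113.10",
     "attack_techniques": ["T1071"]},
    {"host": "decoy-fin-02", "timestamp": t1, "remote_ip": "198.51.100.7",
     "attack_techniques": []},  # untagged, so it produces no IOC
]
iocs = extract_iocs(events)
```

Deduplicating on `(kind, value)` means a single IP seen across several hosts becomes one indicator that accumulates its techniques, hosts, and first and last seen times, which is the context a downstream SIEM or SOAR rule needs.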

Keep your data fresh. Set expiration dates for threat intelligence feeds, remove low-value rules, and maintain a list of known false positives. Make “what should we retire?” a regular discussion in your security meetings. Update your test environments and priorities as your infrastructure changes. This cycle produces better detections, fewer false alarms, and faster investigations.

Case Study: Behavior-Based Hunting to Detection

A global enterprise set up a deception environment that looked like a real finance network, with realistic computers, file shares, and fake credentials for its billing system. When attackers took the lure, delivered as phishing bait, they tried to steal credentials and explore the fake network. CounterCraft allowed security analysts to watch this happen in real time, map the attack to MITRE ATT&CK techniques, and extract IOCs from the actual attack, everything from file hashes to malicious domains. These high-confidence indicators were prioritized in the enterprise’s security tools, helping the team quickly block the real attack and catch similar techniques faster next time. The value came from observing how the actor moved, then letting the right IOCs fall out automatically.

Key Takeaways

Here’s how to make behavior-based threat hunting work:

- Hunt for attack behaviors first, then extract IOCs from what you actually observe

- Label everything with MITRE ATT&CK techniques so your team speaks the same language

- Prioritize indicators from your own environment over generic threat feeds

- Regularly clean house, measuring what works and eliminating what doesn’t

- Use realistic decoy environments to safely study attacker behavior

The bottom line: IOCs are useful when they come from real attacks you’ve observed. Focus on understanding how attackers behave, and you’ll naturally generate better indicators, reduce false positives, and respond faster.

Indicators of compromise tell you what happened yesterday. CounterCraft’s deception-powered threat intelligence shows you what is happening now. By channeling intruders into lifelike digital twins and capturing their tactics in real time, you get adversary-driven, zero-noise signals mapped to ATT&CK, ready for SIEM and SOAR, and strong enough to support DORA and CBEST evidence packs. This is the step beyond reactive lists to live, preemptive, specific intelligence that advances your cybersecurity ecosystem.

If you would like to see how this approach can be tailored to your stack and priorities, schedule a personalized demo.